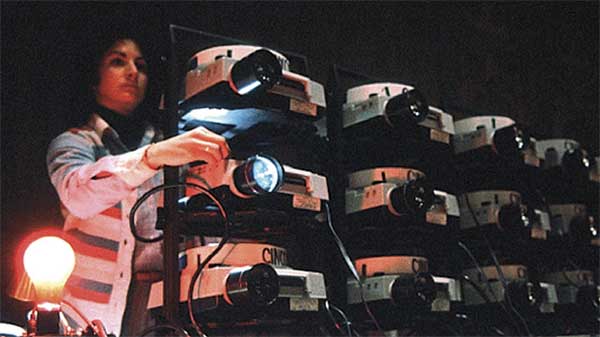

When I joined Dataton, we were just getting started in what was then known as “multi-image”. That was the technique of using multiple slide projectors to create large image surfaces, typically using edge blending or by projecting onto oddly shaped surfaces.

Sound familiar?

We were somewhat late to a game already dominated by companies such as AVL, Arion, Clearlight and Electrosonic, so we had some catching up to do. Which we did in short order. Our PAX slide projector control unit became not just the neatest and most reliable box for doing multi-image, but was also equipped with smart software to run shows produced for our competitors’ systems. This feature got us in the door with major production and staging companies. Reverse-engineering those systems’ control protocols, right down to the minute details of their lamp fade curves, was a lot of fun (and work!).

Making multi-image wasn’t just about those projector control units, but also about the software used to produce the shows. After some initial stand-alone products (such as the tiny MIC3), I came up with MICSOFT. It was initially created for the computer of choice back then: the floppy-disk-based Apple II.

This was before the computer mouse had become commonplace, so I designed MICSOFT to use a joystick instead for some of the programming, including manual creation of lamp fade curves.

The Apple II was eventually largely replaced by the IBM PC. There was no Windows at that time, so programs were written for MS-DOS, and each program had its own idea of what a user interface should look like. MICSOFT PC had to be rewritten from scratch: the Apple II version was written in 6502 assembly due to the Apple II’s limited memory, while the PC version was written mostly in C.

The entire system was still very much focused on controlling slide projectors. However, that era was clearly fading out, so to speak, and we needed something else to sell. As a side note, I guess our competitors didn’t quite see this coming, as most of them disappeared along with the slide shows.

What grew out of multi-image was what we came to define as “true multi-media”. Back then, the term “multi-media” described pretty much anything you did with sound and images on a computer. What I saw was the production community’s desire to use video, lighting and other special effects in their large-scale presentations. Hence, I started looking into ways to incorporate a variety of AV equipment that seemed useful in such “true multi-media” presentations.

I investigated Betacam decks, Laserdisc players, tape decks, Photo CD, moving lights, pyrotechnics, computer graphics, DVI switchers, video projectors – you name it. This was quite a challenge, since the interfaces used by those devices were all over the map. Some were still electro-mechanical (such as cassette and open reel tape decks), while others had various proprietary serial control protocols.

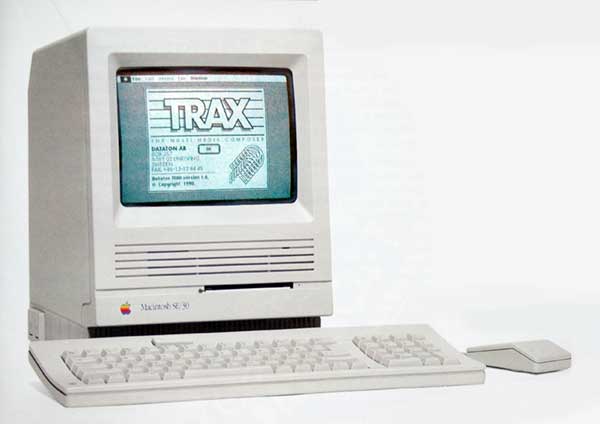

The end result of all this research and head-scratching was our next-generation product, built around the SMARTPAX control unit and the Macintosh-based TRAX software. In one way, SMARTPAX was similar to the PAX slide projector controller: it had four control ports on the back and connected to the same system bus. Internally, however, it was a totally different beast. I came up with a multi-tasking OS along with a dynamically configurable, multi-tiered set of device drivers to control all those AV devices.

The Mac had by now also become a force to be reckoned with, especially among creative people. Windows was there on the PC side, but I didn’t really like the look of it. I got hooked on the Mac and started working on TRAX. Again, there was a lot of work figuring out how a user interface should look and behave to program slide projectors as well as a variety of other AV devices. There wasn’t anything similar around for me to look at, except possibly some MIDI sequencers, so it took a while to come up with the (now seemingly obvious) timeline-with-cues metaphor.

I continued developing TRAX for a number of years, adding advanced capabilities such as multiple timelines (later to find their way into WATCHOUT) as well as the ability to create touch panels and wire up their behavior using a kind of graphical programming language.

Commercially, the system was quite successful for its time (meaning it allowed us to survive the years following the death of multi-image). But in the end, it became rather complex from a technical point of view, with its many SMARTPAX drivers, device adaptor cables (some with their own built-in intelligence), touch panels and TRAX itself.

We were now reaching the end of the ’90s, and it became increasingly clear to me that this complex, multi-headed hydra of a production-and-control system was not the way forward. Computers were now fast enough to do most of this on their own, without all the other junk in between. However, with Dataton being predominantly a hardware company, suggesting an entirely software-based solution was a pretty hard sell.

But as I could clearly see that things were heading in this direction (and, frankly, was sick and tired of maintaining TRAX), I took a real, hard look at where things stood during the spring and summer of 1999.

Computers were now cheap and fast enough to replace most of the other AV gear of the past. Hard disks were getting roomy enough to hold the media files. Ethernet made it easy to connect multiple computers with good performance. The gaming industry had brought forth cheap graphics cards with amazing performance and frame buffers that could handle a couple of full-resolution images. Video playback was also approaching usable resolutions (until then, most computer video had been the size of a postage stamp).

It all came together as a “perfect storm” that made the light bulb turn on in my head. Not only could I use this to get back to the roots of multi-image; it could also result in a comparatively simple system, yet one capable of doing what most of our customers were trying to do with all those Laserdisc players, Betacam decks, video projectors and DFS-500 video switchers controlled by TRAX.

WATCHOUT

I started developing what was to become WATCHOUT in August 1999. At that time, it was internally referred to as “VideoTRAX”. After some initial proof-of-concept tests, development moved forward rapidly. As the previous product generation was clearly running out of steam, and with the bank breathing down our necks, we desperately needed a new product, so a lot of hope was pinned on WATCHOUT. An aggressive launch date was set for mid-December, giving me four nerve-wracking months to get the product presentable.

Not only did I have to finish the product in time; we also had to make an impressive show to launch it with. We worked on the show with a couple of talented guys from Gothenburg, while simultaneously trying to get WATCHOUT to a state where they could actually use it to produce the show. They came up with a lot of clever content, all of it optimized to show off WATCHOUT in ways that looked really impressive. The grand finale was a full-width fly-over water scene, with the WATCHOUT logo splashing up through the waves at the end. To their despair (they had spent days rendering the scene in 3D at full resolution), we had to cut most of it, since the moving horizon line during the approach made it obvious that the computers driving the five projectors weren’t really that well synchronized. (Back then, the computers were simply started together, with no active synchronization between them.)

Launch Day

On launch day, we first had a short introduction by a well-known slide show producer (Douglas Mesney), using a small tripod screen and a single projector, PowerPoint style. It was deliberately made somewhat low-key and boring, just to make the contrast even starker. We then carried the tripod screen off stage and revealed a huge screen stretching across the entire auditorium, with five projectors lined up to run an edge-blended, very wide format presentation. We were using small, office-grade DLP projectors, another brand new technology that was sorely needed. (LCD projectors back then lacked the contrast ratio required to handle edge blending properly.) All in all, the launch was a great success.

This first generation of WATCHOUT was somewhat odd in that the production software ran on Windows, while the display software ran only on the Mac. The production software was written in Java, which was the hot ticket for desktop software at the time, and Java only ran well on the PC. However, due to my background on the Mac, I wrote the display software for the old “classic” Mac OS (this was just before MacOS X). It didn’t really run that well on the G3 PowerMacs I had during development, so we were getting a bit concerned about whether we would be able to run full-screen video at all. Luckily, the G4 generation was introduced around this time. By bringing in an Apple representative to do a little spiel as part of our launch, we managed to scrounge up the very first G4 computers in the country for the event.

Version 1

Needless to say, the product was far from finished when we launched it. It took me a few more months of hectic development to get everything working, write the manual, and tie up all the loose ends to make WATCHOUT useful. Even then, it was quite rudimentary in many ways. The lack of an Undo command, for example, caused our customers grief (and got them into the habit of saving often and keeping lots of backups). But the fact that WATCHOUT was pretty much the only game in town for this kind of presentation (and remained so for several years), and looked really stunning on screen, allowed us to start selling it anyway. In the process, it probably saved the company.

I kept pounding on this first version for a couple of years. As MacOS X came along, we were eventually able to make the production software run there too, since I had written it in the cross-platform Java language. Unfortunately, it took a while for Apple to get Java working on the Mac, since they were busy getting MacOS X out the door. So for a while, we had the odd situation where the display software ran on MacOS 9, while the production software required MacOS X.

Since MacOS 9 was at end of life, and the graphics and video subsystems of MacOS X weren’t quite up to speed yet, we found ourselves “between a rock and a hard place” as far as the display software was concerned. We also had a lot of requests for PC support on the display side. The PC gaming market had exploded, resulting in very cheap but powerful computers from a number of competing suppliers.

I ended up porting the display software over to Windows to get us out of this crunch. So by the end of the v1 cycle, we had both the production and the display software running on Mac and PC.

Version 2

Once the display software had moved over to the PC side of the fence, a whole slew of new opportunities opened up. Chief among them was the ability to integrate live video and PowerPoint using capture cards, which were then becoming available. Computers were also getting faster, allowing me to add support for full HD video. All this led to the introduction of version 2 in 2003.

A downside of having the production software written in Java, while the display software was written in C++, was that I couldn’t share any code between the two. Also, getting the kind of playback performance needed for full-fidelity preview in the Stage window just wasn’t possible. By now, it was also clear that Java wasn’t the be-all and end-all of desktop software it was once cracked up to be. Once Sun Microsystems (which owned Java) sued Microsoft, things fell apart here pretty fast.

Version 3

So in version 3, introduced in 2005, I re-wrote the production software from scratch in C++ as a Windows application. The downside of this decision was that we had to leave the Mac platform, saddening many users (myself included). The upside was that once this was done, we could get far better performance and Stage window preview, as well as a significant amount of code sharing between the production and display software components. By now, I had also brought another guy on board to help out with the development.

Since the rewrite took a lot of effort, there weren’t a whole lot of new features in this version when it was released. But by now the product was clearly off the ground, and there was enough interest from the market, along with new streams of customers, for that to be less of an issue.

Version 4

In version 4, I really tried to make up for the lack of new features in version 3. Introduced in 2008, it brought along numerous new capabilities such as compositions (well known from After Effects and similar programs), multiple timelines (an idea I first pioneered in TRAX), external inputs using MIDI, DMX and other protocols, all tied together through the task window (also stolen from TRAX).

I threw in some output capabilities for networked devices, serial ports and DMX512, resulting in a bit of a flashback to the SMARTPAX days.

Version 5

The key features of version 5, introduced in 2011, were the ability to drive up to six displays from a single display computer, support for stereoscopic presentations, some 3D capabilities, and the Dynamic Image Server. Stereoscopic imagery (or “3D”, as it was commonly called) was the big thing in TV sets that year, but it never really took off in the living room.

Midway through the version 5 cycle, we released version 5.5 along with the WATCHPAX. This version also added hardware-assisted synchronization, finally enabling the use of WATCHOUT in applications requiring 100% frame accuracy, such as large LED walls.

Version 6

The culmination of 15 years of effort, version 6 was introduced in 2015. Its key feature was full 3D support, both in the form of bringing 3D models into WATCHOUT and the ability to place projectors anywhere in 3D space. While WATCHOUT had been used for 3D mapping for years, these new features made 3D an integral and natural part of WATCHOUT’s workflow, rather than requiring all the 3D to be produced and “baked” as video, with WATCHOUT serving merely as a playback engine.

By now, my development team had grown quite a bit, allowing us to move forward on multiple fronts. Other areas that saw significant enhancements in version 6 included a revamped user interface, multi-channel audio playback, support for additional video codecs, and playback of uncompressed image sequences.

Epilogue

Congratulations if you made it all the way down here! This was supposed to be a brief history of WATCHOUT, but it came to encompass quite a bit more. You see, it’s all connected. It’s hard to start somewhere in the middle, as it all links back to what came before. Now, at the very end of 2015, having left Dataton a couple of months ago, it felt like a good time to summarize things.

Leaving WATCHOUT in the capable hands of my former colleagues – some of whom have worked with me for years – I feel confident that there will be many new and exciting features to look forward to. Although now from a somewhat different vantage point, I intend to stay close and continue working with talented WATCHOUT users all over the world. I’m developing new software and services to work alongside WATCHOUT to further enhance what can be accomplished. My hope is that this will allow the total to become greater than the sum of its parts.

In closing, I can’t help but feel a bit of déjà vu around WATCHOUT, reminiscent of how the previous TRAX product generation felt during the late ’90s. After developing a piece of software for such a long time, it becomes rather complex. While I believe I’ve done a decent job of keeping WATCHOUT “shockingly simple” on the surface (our slogan when we launched the product in 1999), there are now a lot of moving parts to take into account internally, making the process heavier. This reminds me of a saying I used to have on the wall in my office: “Inside every large program is a small program, struggling to get out.”

Stay tuned!

Mike

PS: To keep up to date with what’s coming down the pike, don’t forget to sign up for my newsletter.